How to Reduce Bias in the Hiring Process: 12 Strategies

Addressing unconscious bias and reducing bias in the hiring process can be challenging, but it is essential to promoting fairness, diversity, and inclusivity. Hiring bias occurs when personal preferences or unconscious judgments influence hiring decisions, potentially leading to unfair outcomes and a lack of diversity within the workplace. These biases can manifest in various ways, from preconceived notions about a candidate’s background to subtle preferences for certain characteristics.

Handling red flags on a background check and addressing hiring bias requires a structured approach that ensures transparency and compliance with legal standards. Strategies such as incorporating Social Media Background Checks and using AI-powered background screening tools can streamline the process while promoting impartial and data-driven decision-making.

What is Hiring Bias?

Hiring bias happens when personal preferences, unconscious judgments, or stereotypes influence the hiring process, potentially resulting in unfair decisions and reduced workplace diversity.

Implicit bias refers to automatic and involuntary attitudes and stereotypes that influence perceptions and decisions. Because these unconscious judgments operate without a person's awareness, awareness training is needed to help individuals recognize and challenge them, particularly in hiring processes.

This bias can manifest in various ways, such as making assumptions based on a candidate’s background, appearance, or other unrelated factors, and can often occur without hiring managers even realizing it.

Examples include:

- Favoring candidates with similar educational or cultural backgrounds.

- Making decisions based on gender, race, age, or other demographic factors.

- Relying too heavily on gut feelings rather than objective criteria.

Candidate behavior, such as how they present themselves during interviews or online, can also affect perceptions, which may reinforce biases. However, adopting the right strategies—such as using standardized evaluation criteria, conducting blind resume reviews, and utilizing AI-powered tools—can significantly minimize these influences and create a fairer hiring process.

Strategies for Reducing Hiring Bias

The Society for Human Resource Management (SHRM) recommends adopting structured hiring processes to eliminate subjectivity in hiring. In particular, SHRM suggests using structured interviews, in which every candidate is asked the same set of predefined questions focused on factors that directly affect job performance.

This structure helps minimize bias by removing subjective factors, such as appearance, from the evaluation. Implementing these strategies not only enhances workplace safety but also contributes to risk reduction during the hiring process. Here are 12 ways to effectively reduce hiring bias:

1. Implement Structured Interviews with Consistent Criteria

Using a standardized interview process for all candidates ensures that assessments are based on job-relevant factors rather than unrelated traits. This structured approach reduces subjective judgments and keeps the focus on the qualifications and skills that affect job performance.
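To make the idea concrete, here is a minimal Python sketch of rubric-based interview scoring. It assumes a hypothetical rubric of three job-related criteria rated on a 1 to 5 scale; the criterion names and scale are illustrative, not a prescribed standard. The function rejects any rating sheet that skips a criterion or adds an unplanned one (such as a "gut feel" score), so every candidate is measured against the same predefined questions.

```python
# Hypothetical rubric: the same predefined, job-related criteria for every candidate.
RUBRIC = ("problem_solving", "communication", "technical_skills")
VALID_RATINGS = range(1, 6)  # 1 = weak, 5 = strong


def score_interview(ratings: dict[str, int]) -> float:
    """Return the average rating, requiring exactly the rubric criteria to be scored."""
    missing = set(RUBRIC) - set(ratings)
    unexpected = set(ratings) - set(RUBRIC)
    if missing or unexpected:
        raise ValueError(f"missing: {sorted(missing)}, unexpected: {sorted(unexpected)}")
    for criterion, value in ratings.items():
        if value not in VALID_RATINGS:
            raise ValueError(f"{criterion} rating {value} is outside 1-5")
    return sum(ratings[c] for c in RUBRIC) / len(RUBRIC)


if __name__ == "__main__":
    print(score_interview({"problem_solving": 4, "communication": 3, "technical_skills": 5}))  # 4.0
    try:
        # An off-rubric "gut_feel" score is rejected rather than silently counted.
        score_interview({"problem_solving": 4, "communication": 3, "gut_feel": 5})
    except ValueError as err:
        print("rejected:", err)
```

Rejecting incomplete or extra criteria, rather than averaging whatever was entered, is the design choice that keeps evaluations comparable across candidates.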

2. Use Blind Recruitment

Blind recruitment hides candidates' personal details—such as names, photos, and other potentially bias-triggering information—so that hiring decisions are based purely on skills, qualifications, and experience. This process helps prevent bias based on race, gender, or other irrelevant personal traits.
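A minimal Python sketch of the redaction step follows, assuming applications arrive as simple dictionaries. The field names in `IDENTIFYING_FIELDS` and the `blind_application` helper are hypothetical and would need to match your own applicant-tracking data. This only removes structured fields; names or photos embedded in free-text documents, such as an uploaded resume, would need separate handling.

```python
# Hypothetical set of fields treated as bias-triggering; adjust to your own data model.
IDENTIFYING_FIELDS = {"name", "photo_url", "email", "date_of_birth", "address"}


def blind_application(application: dict, candidate_id: str) -> dict:
    """Return a copy of an application with identifying fields removed.

    Reviewers see only a neutral candidate ID plus job-relevant data such as
    skills and experience. The original record is left unchanged.
    """
    redacted = {k: v for k, v in application.items() if k not in IDENTIFYING_FIELDS}
    redacted["candidate_id"] = candidate_id
    return redacted


if __name__ == "__main__":
    app = {
        "name": "Jane Doe",
        "photo_url": "https://example.com/photo.jpg",
        "email": "jane@example.com",
        "skills": ["SQL", "Python"],
        "years_experience": 6,
    }
    print(blind_application(app, "C-0042"))
    # {'skills': ['SQL', 'Python'], 'years_experience': 6, 'candidate_id': 'C-0042'}
```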

3. Standardize Job Descriptions and Interview Questions

Standardized job descriptions are an important step to make sure the hiring process is fair. Clear and neutral language in job descriptions and standardized interview questions help reduce bias by focusing on job-specific requirements and qualifications. This ensures that hiring managers evaluate candidates consistently based on the same set of criteria.

4. Incorporate Fair Social Media Screening

While social media screening can provide insights into a candidate's behavior and character, it must be done in a fair and consistent manner. Using tools like third-party Social Media Background Checks ensures that all candidates are evaluated based on the same criteria, reducing the risk of bias in this area.

5. Utilize AI and Data-Driven Tools

By instantly analyzing thousands of applications, AI recruiting solutions can reduce the effort of identifying top talent, surfacing the strongest candidates in a fraction of the time it would take a person to review them manually. When applied consistently, AI can help reduce human error and bias, allowing for data-backed decisions.

6. Conduct Unconscious Bias Training

Unconscious bias training aims to help employees understand what unconscious bias is, how it can influence their choices and interactions, and how to reduce its effects at work. HR consultants often facilitate this training, raising awareness and encouraging fair decision-making during the hiring process.

7. Set Diversity Hiring Goals

Setting diversity hiring goals helps ensure that teams make intentional efforts to build a diverse and inclusive workforce by actively seeking out diverse candidates. By setting clear targets for diversity, companies can promote equal opportunities and reduce bias in the selection process.

8. Expand Talent Pools

To expand your talent pool, showcase your company culture to prospective candidates, use social media for outreach, revamp your recruitment strategy, and consider executive search firms. You can also offer hybrid or remote work options, develop an employee referral program, and prioritize a unique and inclusive workplace.

These efforts bring in a wide range of applicants and can help counteract any biases that may arise from a narrow talent search.

9. Continuous Feedback and Review

Regularly reviewing hiring practices and seeking feedback ensures that bias-reduction strategies are being followed and improved as necessary. An HR assessment can help identify areas for improvement, keeping the recruitment process effective and equitable.

10. Focus on Cultural Add, Not Fit

Rather than focusing on culture fit, organizational leaders can concentrate on culture add. This more inclusive approach encourages diversity by hiring individuals who bring unique perspectives rather than those who simply fit the existing mold.

11. Use Diverse Interview Panels

Diverse interview panels also provide a range of perspectives on how suitable a candidate is.

Interviewers from a range of backgrounds and demographics are likely to submit a wider range of questions as the interview is planned, which can highlight the benefits of different lived experiences. This reduces the risk of bias by incorporating multiple viewpoints.

12. Educate and Train Hiring Managers

Educating and training hiring managers is a crucial step in reducing unconscious bias in the hiring process. By providing comprehensive training programs, organizations can help hiring managers become more aware of their own biases and learn how to make more objective decisions. This training can also emphasize the importance of diversity and inclusion in the workplace and how to create a more inclusive hiring process.

Some key topics to cover in hiring manager training include:

- Understanding Unconscious Bias: Educate hiring managers on what unconscious bias is and how it can impact the hiring process.

- Recognizing and Overcoming Biases: Provide strategies for identifying and mitigating affinity bias, confirmation bias, and other types of biases.

- Creating Inclusive Job Descriptions: Teach hiring managers how to write job descriptions that are free from biased language and focus on essential qualifications.

- Using Standardized Interview Processes: Emphasize the importance of structured interviews and standardized questions to reduce bias.

- Evaluating Candidates Objectively: Train hiring managers to assess candidates based on objective criteria rather than subjective impressions.

- Fostering an Inclusive Workplace Culture: Highlight the benefits of diversity and inclusion and how hiring managers can contribute to a more inclusive environment.

By educating and training hiring managers, organizations can significantly reduce unconscious bias in the hiring process and create a more inclusive and diverse workplace.

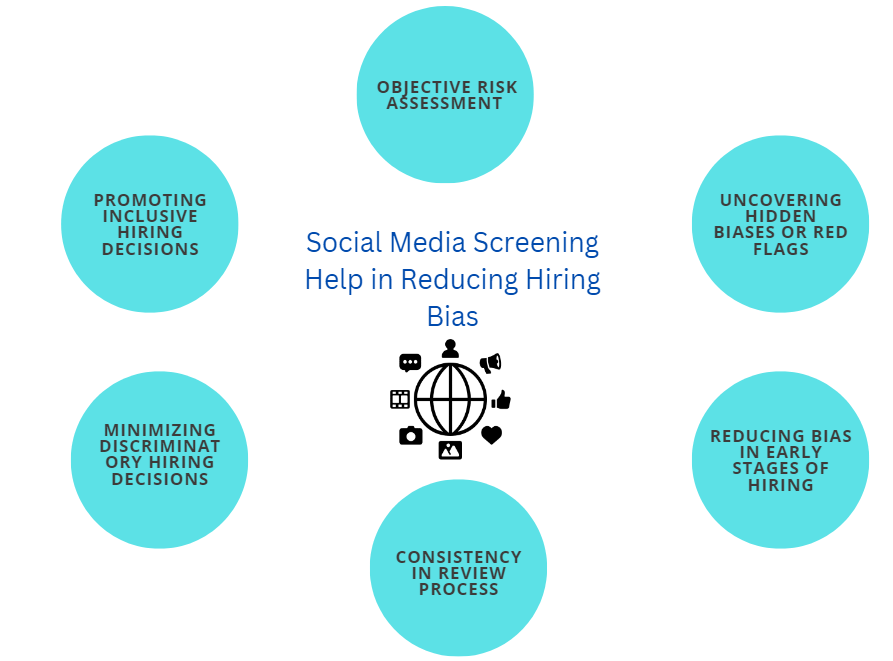

How Social Media Screening Can Help Reduce Hiring Bias

Using third-party social media screening tools can provide valuable insights and reduce bias in the hiring process. The importance of social media screening is highlighted by the fact that most employers (91%) already check candidates' profiles as part of pre-hire evaluations. Pre-Hire and Post-Hire Social Media Screening helps assess applicants objectively while also uncovering potential risks that may not appear in a traditional background check.

1. Objective Risk Assessment

AI-powered social media screening tools enable employers to assess candidates based on objective data from their social media profiles. These tools can analyze behavior patterns, interests, and interactions without the influence of subjective biases, such as personal preferences or preconceived notions.

This ensures that the evaluation is based on relevant, factual information that directly contributes to a candidate's risk profile and overall suitability for the job, rather than irrelevant traits or assumptions.

2. Uncovering Hidden Biases or Red Flags

Social media screening helps identify potential red flags or hidden biases of candidates that might not surface during traditional interviews or background checks. For example, a candidate's social media activity can reveal inappropriate behavior, discriminatory language, or personal attitudes that are not immediately visible through resumes or interviews.

AI tools can flag these issues while keeping the evaluation neutral, ensuring that hiring decisions are made based on comprehensive, transparent data rather than overlooked biases.

3. Reducing Bias in Early Stages of Hiring

When social media screening is conducted early in the hiring process, it helps reduce the chances of bias impacting the decision-making process. At this stage, employers can evaluate candidates based on their social media behavior and public interactions rather than making assumptions based on personal characteristics like appearance or name.

By relying on this data early on, employers can reduce bias in the initial stages of hiring and base those stages on objective qualifications and behavior.

4. Consistency in Review Process

AI-driven social media screening tools bring consistency to the review process by applying the same criteria to all candidates. Every applicant is assessed against the same set of parameters, ensuring fairness in the process.

Unlike human evaluators, who may have unconscious biases that affect their judgment, AI tools apply the same criteria in the same way every time, making the review process more standardized and reliable across all candidates.

5. Minimizing Discriminatory Hiring Decisions

AI-based social media screening helps minimize discriminatory hiring decisions by reducing the influence of unconscious biases. For example, employers may unknowingly favor candidates who share characteristics with them, such as gender, ethnicity, or social circles.

Social media screening tools reduce this potential bias by focusing solely on candidates' public behaviors and qualifications. This leads to more equitable decision-making, ensuring that all candidates are evaluated fairly regardless of personal attributes.

6. Promoting Inclusive Hiring Decisions

AI-powered social media screening tools are designed to detect harmful behaviors—such as hate speech, discrimination, or harassment—without introducing the biases of the evaluator. This promotes a more inclusive hiring process by flagging inappropriate behavior without making assumptions about candidates based on personal characteristics like gender, race, or cultural background.

As a result, organizations can make hiring decisions that are more inclusive, selecting individuals who align with company values of respect, diversity, and inclusion.

By using social media screening tools, employers can reduce bias at multiple stages of the hiring process, from initial assessments to final decisions. This approach ensures a fairer, more consistent, and more inclusive evaluation of candidates, while also minimizing the risk of discriminatory practices.

Benefits of Reducing Hiring Bias for Businesses

Reducing hiring bias leads to a more diverse workforce, fostering innovation and creativity. Research suggests that diverse teams can outperform homogeneous ones. Social media screening is essential for modern businesses, helping to address workplace issues and maintain a safe environment.

- Better Team Performance: Diverse teams offer a wider range of ideas and perspectives.

- Increased Employee Satisfaction: A fair, inclusive hiring process makes employees feel valued, boosting morale.

- Attraction of Top Talent: Companies committed to diversity draw exceptional candidates.

- Enhanced Company Reputation: A diverse workforce positively impacts the company's image.

- Lower Turnover Costs: A more inclusive workplace improves retention, reducing turnover expenses.

- Proactive Risk Management: Social media screening identifies potential red flags in candidates.

- Commitment to Best Practices: Reducing hiring bias supports ethical hiring, enhancing credibility.

By promoting diversity, businesses can drive growth and cultivate a positive workplace culture.

What Are the Types of Hiring Bias?

Hiring bias can take many forms, including but not limited to:

1. Affinity Bias

Affinity bias occurs when a hiring manager favors candidates who are similar to themselves in terms of background, interests, experiences, or personal traits. This unconscious bias often leads to the selection of individuals who share the same cultural, educational, or social characteristics.

For example, if a hiring manager attended the same university as a candidate or shares their hobbies, they may unconsciously view that candidate as more competent or likeable, regardless of the candidate's actual qualifications. Affinity bias can limit diversity and cause hiring teams to overlook more qualified candidates from different backgrounds.

2. Confirmation Bias

Confirmation bias happens when a hiring manager looks for information that supports their existing beliefs or assumptions about a candidate while ignoring evidence that contradicts them. For instance, if a hiring manager forms a negative first impression of a candidate from their resume or an early conversation, they may focus only on information that supports that view (such as a gap in the candidate's employment history) while dismissing positive aspects.

This bias can result in an unfair evaluation of candidates, based on preconceived notions rather than objective qualifications or performance.

3. Gender Bias

Gender bias occurs when a hiring manager favors one gender over another. Gender bias can manifest through subtle or overt actions, such as assuming that women are less suited for leadership positions or technical roles or perceiving men as more competent in specific areas.

It is equally important to eliminate biases related to sexual orientation during the hiring process to foster diversity and inclusivity.

The Importance of Reducing Bias in the Hiring Process

Reducing bias in the hiring process is essential for creating a diverse and inclusive workplace. When bias is present, it can lead to the unfair treatment of qualified candidates and negatively impact the quality of hires. Additionally, bias can introduce risks to an employer’s reputation and bottom line.

Some key reasons why reducing bias in the hiring process is important include:

- Ensuring Fairness and Equity: A bias-free hiring process ensures that all candidates are evaluated based on their qualifications and skills, promoting fairness and equity.

- Attracting and Retaining Top Talent: Companies that are committed to reducing bias are more likely to attract and retain top talent, as candidates seek employers who value diversity and inclusion.

- Creating a Diverse and Inclusive Workplace Culture: Reducing bias helps build a diverse workforce, which can lead to a more innovative and creative workplace.

- Reducing Legal Risks: A fair hiring process minimizes the risk of legal consequences related to discrimination claims.

- Improving Employee Morale and Retention: Employees are more likely to feel valued and satisfied in a workplace that prioritizes fairness and inclusivity, leading to higher retention rates.

By reducing bias in the hiring process, organizations can create a more inclusive and diverse workplace, ultimately improving their overall performance and fostering a positive workplace culture.

How Ferretly Helps Reduce Hiring Bias with Social Media Screening

Ferretly's AI-driven screening solutions reduce hiring bias by evaluating candidates based on objective data. By also using human social media analysts to verify reports, Ferretly helps ensure that only relevant, job-related information is considered in hiring decisions.

This approach also helps in reducing workplace violence risk and minimizes employee turnover by identifying potential issues before they escalate.

Also, it can help organizations avoid disciplinary action related to hiring mistakes.

Frequently Asked Questions

1. What is blind recruitment?

Blind recruitment is a strategy where identifying details like names and photos are removed to ensure a focus on skills and experience.

2. Can technology and AI help reduce hiring bias?

Yes. AI tools like Ferretly can evaluate candidates consistently based on data, minimizing the influence of human biases.

3. What is social media screening?

Social media screening is the process of reviewing publicly available information from social media sites to gain insight into a candidate's background and character. This insight can be crucial for understanding a candidate's suitability for the role beyond what interviews and traditional background checks reveal.

4. How can companies monitor and measure bias in their hiring process?

Regular audits, feedback systems, and AI-based tools can help companies track and reduce bias over time.
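One common way to audit outcomes is to compare selection rates across applicant groups. The Python sketch below applies the "four-fifths rule" of thumb from the U.S. Uniform Guidelines on Employee Selection Procedures, which treats a group's selection rate below 80% of the highest group's rate as a possible sign of adverse impact. The function names, group labels, and counts are hypothetical, and a flag is a prompt for closer review, not a legal finding.

```python
def selection_rates(applicants: dict[str, int], hired: dict[str, int]) -> dict[str, float]:
    """Selection rate per group: number hired divided by number who applied."""
    return {g: hired.get(g, 0) / n for g, n in applicants.items() if n > 0}


def impact_ratios(applicants: dict[str, int], hired: dict[str, int]) -> dict[str, float]:
    """Each group's selection rate relative to the highest group's rate."""
    rates = selection_rates(applicants, hired)
    top = max(rates.values(), default=0.0)
    if top == 0:
        return {}  # no hires yet, so there is nothing to compare
    return {g: rate / top for g, rate in rates.items()}


def flag_groups(applicants: dict[str, int], hired: dict[str, int], threshold: float = 0.8) -> list[str]:
    """Groups whose impact ratio falls below the threshold (0.8 = four-fifths)."""
    return sorted(g for g, ratio in impact_ratios(applicants, hired).items() if ratio < threshold)


if __name__ == "__main__":
    applicants = {"group_a": 100, "group_b": 80}  # hypothetical counts
    hired = {"group_a": 30, "group_b": 12}
    print(impact_ratios(applicants, hired))
    print(flag_groups(applicants, hired))  # ['group_b']
```

Here group_b's selection rate (15%) is half of group_a's (30%), so it falls below the four-fifths threshold and would be worth a closer look in a regular audit.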

5. How does reducing bias improve workplace diversity?

By ensuring a fair hiring process, companies can attract a wider range of candidates, build a stronger workplace culture, improve employee retention, and reduce workplace violence risk.